Jiaqi Li 李嘉祺

About me

I am currently a fourth-year undergraduate student in Software Engineering at the School of Software, Tsinghua University. I am a research intern at the AI4CE Lab at New York University and the Litchi Lab at the College of AI, Tsinghua University, advised by Prof. Chen Feng and Prof. Yiming Li. Previously, I worked with Prof. Jiachen Li at the University of California, Riverside. My research interests lie at the intersection of Spatial Intelligence, 3D Vision, and Embodied AI. I am actively seeking PhD opportunities for Fall 2026 and am always open to communication and collaboration. Please feel free to contact me!

Education

2022.09-2026.06 | B.E. Software Engineering, School of Software, Tsinghua University

Research Experience

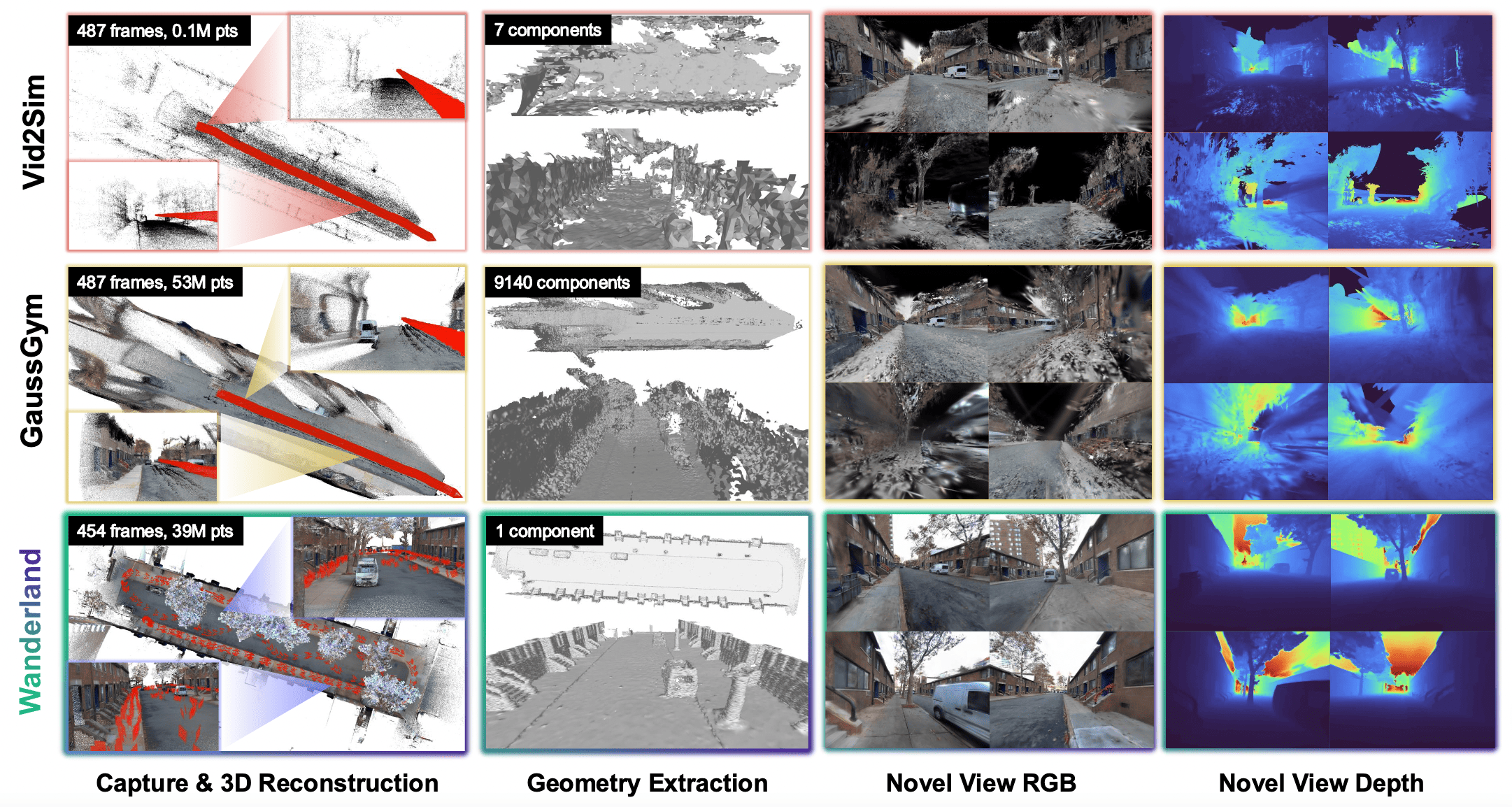

2025.03-2025.11 | Wanderland: Geometrically Grounded Simulation for Open-World Embodied AI

I joined the AI4CE Lab at NYU Tandon to work on the Wanderland project under the guidance of Prof. Chen Feng. Wanderland reconstructs large-scale and diverse urban environments using handheld LiDAR–IMU–RGB sensor rigs and trains 3D Gaussian Splatting (3DGS) models that are integrated into Isaac Sim. We investigate whether visual realism alone is sufficient for embodied AI and conclude that it is not: trustworthy benchmarking still demands metric-scale geometric grounding that previous pipelines lack. My contributions included designing the data acquisition and reconstruction pipeline, optimizing sensor calibration and trajectory estimation, and evaluating the impact of geometric constraints on reconstruction quality and on reinforcement learning-based navigation performance. This experience significantly deepened my understanding of how geometric priors can enhance embodied AI performance in real-world scenarios.

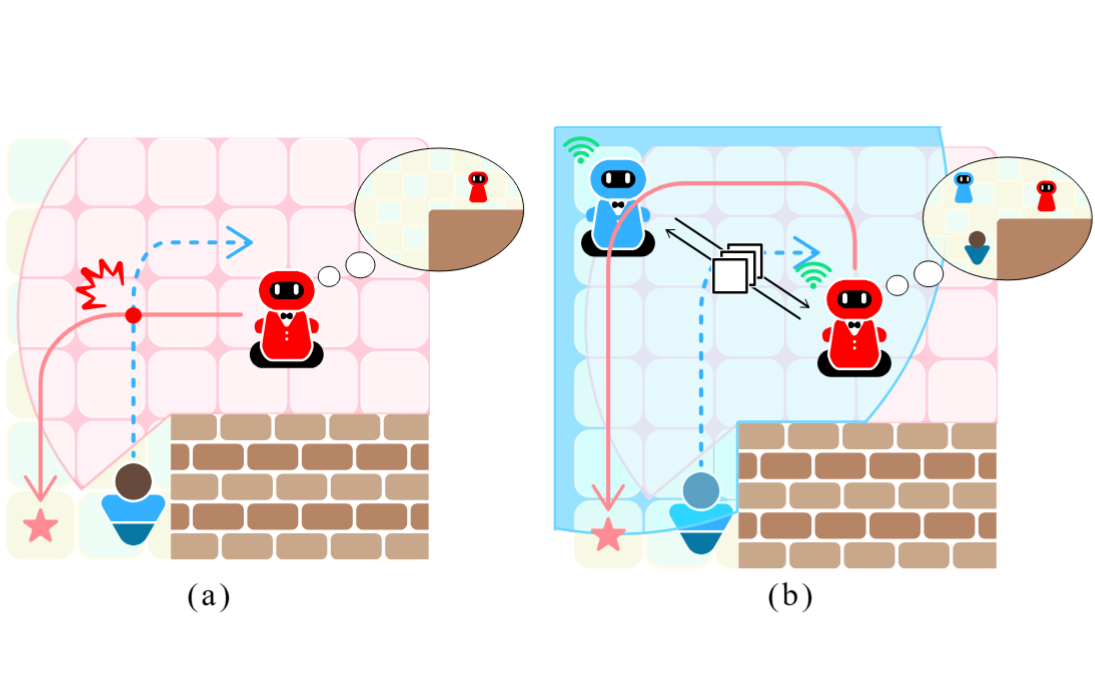

2024.06-2025.01 | Multi-Robot Social Navigation with Cooperative Occupancy Prediction

I visited the TASL at the University of California, Riverside as a visiting scholar to conduct this research under the guidance of Prof. Jiachen Li. In this project, we integrated cooperative perception methods into a multi-agent reinforcement learning framework to address social navigation tasks using Occupancy Grid Map Prediction (OGMP). Our approach improved navigation performance in complex environments with multiple agents. Through this experience, I gained an understanding of reinforcement learning and social navigation tasks, as well as skills in communicating and leading research within a diverse team.

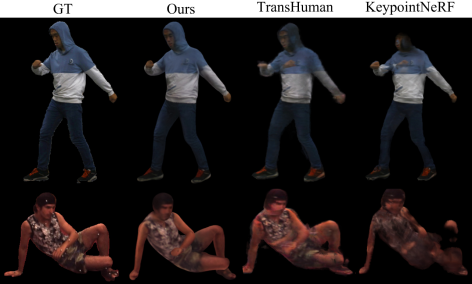

2023.08-2024.05 | Feature Aggregation for Real-time Free-viewpoint Dynamic Human Video from Sparse Views

This project is directed by Prof. Feng Xu and Wenbin Lin and focuses on the real-time reconstruction of human body movements, mesh, and lighting textures from sparse viewpoints. Specifically, I use a small number of mobile phone videos as input to estimate human pose and render it as a mesh model in real time. The paper related to this project is currently under review.

2023.03-2023.07 | Quality of Experience (QoE) Improvement in Mobile Live Streaming Program

I joined the Student Research Training program "QoE Improvement in Mobile Live Streaming", advised by Prof. Lifeng Sun. In this project, I surveyed and reviewed the relevant literature and implemented a method in C++ for evaluating video streaming hotspots. This method was eventually applied to the adaptive bitrate system in our laboratory.

Awards & Scholarships

2024.11 | Awarded Comprehensive Scholarship (~Top 10%) at School of Software, Tsinghua University.

2024.10 | Received the Weng Scholarship at Tsinghua University.

2023.09 | Received the 2023 Hantex Scholarship.

Publications

PDF Currently under review

PDF Currently under review

Projects & Homework

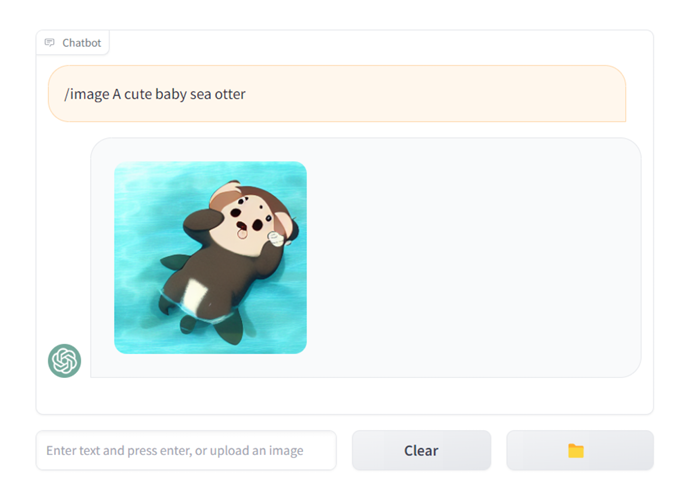

Course Project

Developed a multi-modal AI assistant that integrates language processing, image generation, and speech recognition capabilities using LocalAI and Gradio. The assistant facilitates interactive conversations, generates images from textual prompts, understands and responds to voice commands, summarizes document content, and classifies images. This project gave me valuable insights into project management and multi-model integration.

Code | 97/100, A

Team Project

This web application aims to ease language barriers for foreign travelers by providing an intuitive interface for dish recommendations, translations, and cultural insights. It addresses the pain point of ordering unfamiliar cuisines without multilingual context when traveling. Leveraging a dish database enhanced by an LLM, it delivers context-aware suggestions, translations, and tags (e.g., “Spicy”, “Vegetarian”) in multiple languages to guide user choices. The backend is built with Django.

Code | 95/100, A | “Software Innovation & Creativity” Contest 2024, Silver Prize

Homework

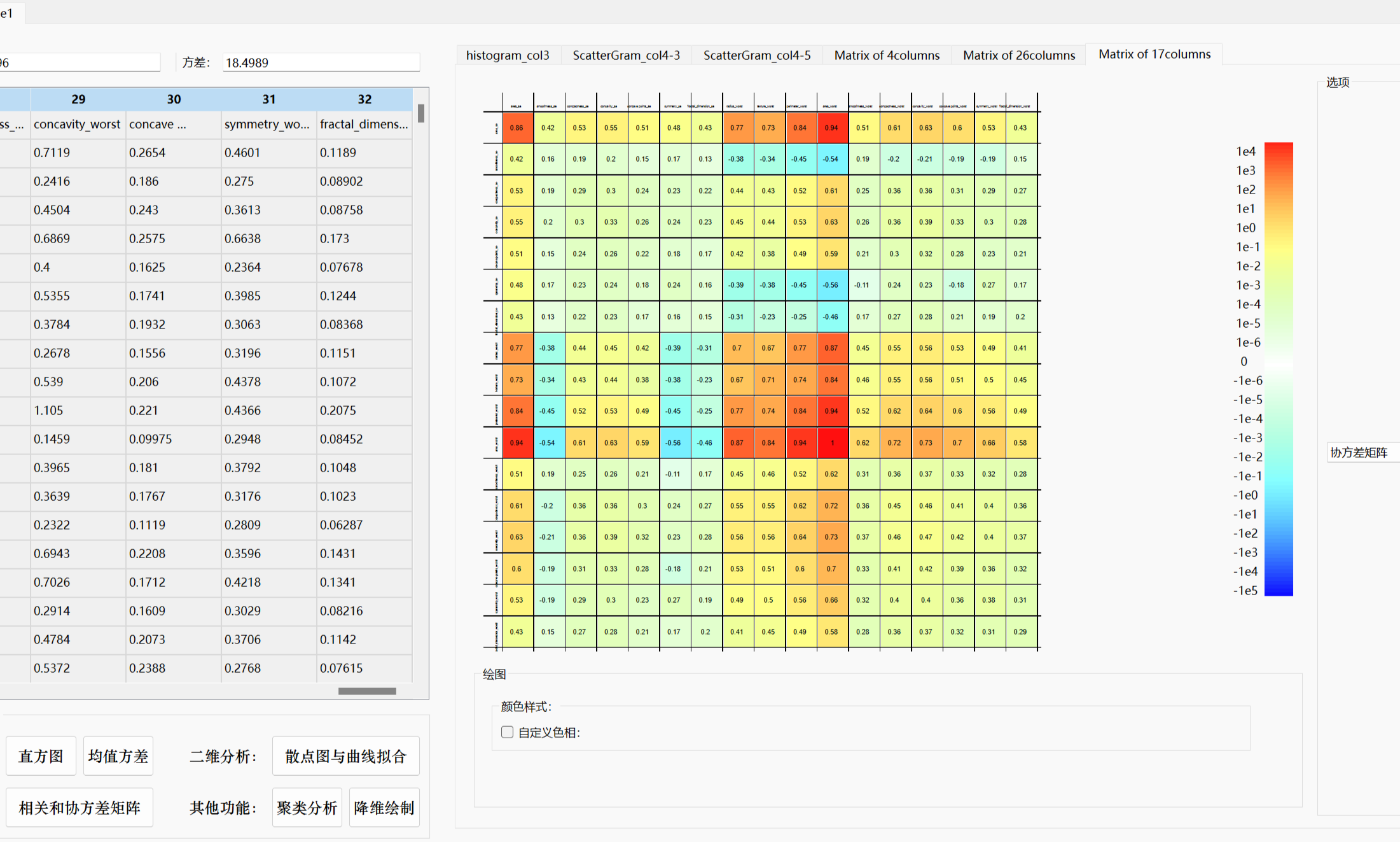

Mediplot is a medical data visualization tool built with Qt and Eigen, developed as a project for my programming training course. It implements core data analysis functions such as data import, chart drawing, and cluster analysis.

Code | 90/100, A-

Homework

My first C++ homework project: a puzzle game similar to the well-known game "Lightbot", implemented through command operations and image file reading and writing.

Interests & Misc

🎺🎵

I am interested in trombone and classical music. I am also a member of TUSO. Here are some videos of our performances:

Tsinghua University New Year Special Concert, 2024.01

TUSO’s 30th Anniversary Concert, 2023.06

✈📷

I like traveling and photography. My profile picture was taken during my trip to Kyoto.

I hope to share my travel experiences here, and you will see them soon…

🤝🌻

I plan to document my volunteer activities here, which you will be able to see soon…
